Why differential privacy (DP)?

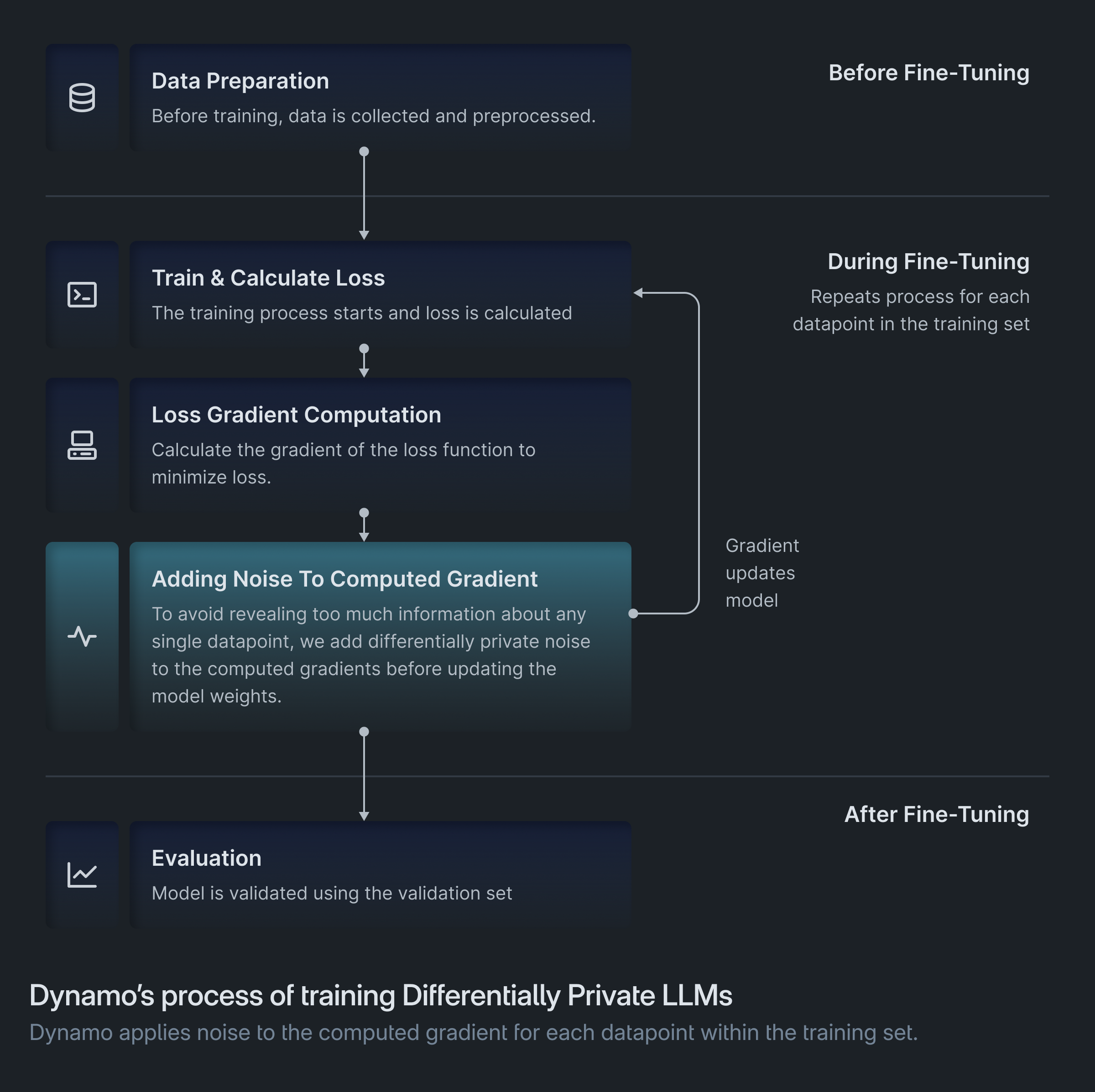

Recent research shows that large language models (LLMs) are often prone to memorizing their training and fine-tuning datasets. This memorization creates a vulnerability that adversaries can exploit by crafting specific prompts to extract sensitive information from the model.

For organizations developing and deploying LLMs, this presents a significant risk to data security and privacy. Differential privacy (DP) helps mitigate this risk by injecting carefully calibrated statistical noise during training. The noise limits how much any single training example can influence the final model, which controls the risk of memorization, and the amount of noise sets the balance between privacy and model performance.

Given its effectiveness, differential privacy is being closely examined by federal agencies as a key defense against adversarial attacks and data leakage in LLMs. The National Institute of Standards and Technology (NIST), which developed the widely used NIST AI Risk Management Framework, endorsed differential privacy as the most reliable method for ensuring robust privacy protection against both known and future attacks, even with multiple data releases.

Other government organizations, like the U.S. Census Bureau, are starting to adopt differential privacy as a core component of their data protection strategies. To expand the use of privacy-preserving machine learning, it's crucial to develop differential privacy solutions that can efficiently handle large datasets and complex applications.

“Differential privacy is currently the best known method for providing robust privacy protection against known and future attacks, even in the face of multiple data releases.” — The National Institute of Standards and Technology (NIST)

Challenges in adopting differential privacy for LLMs

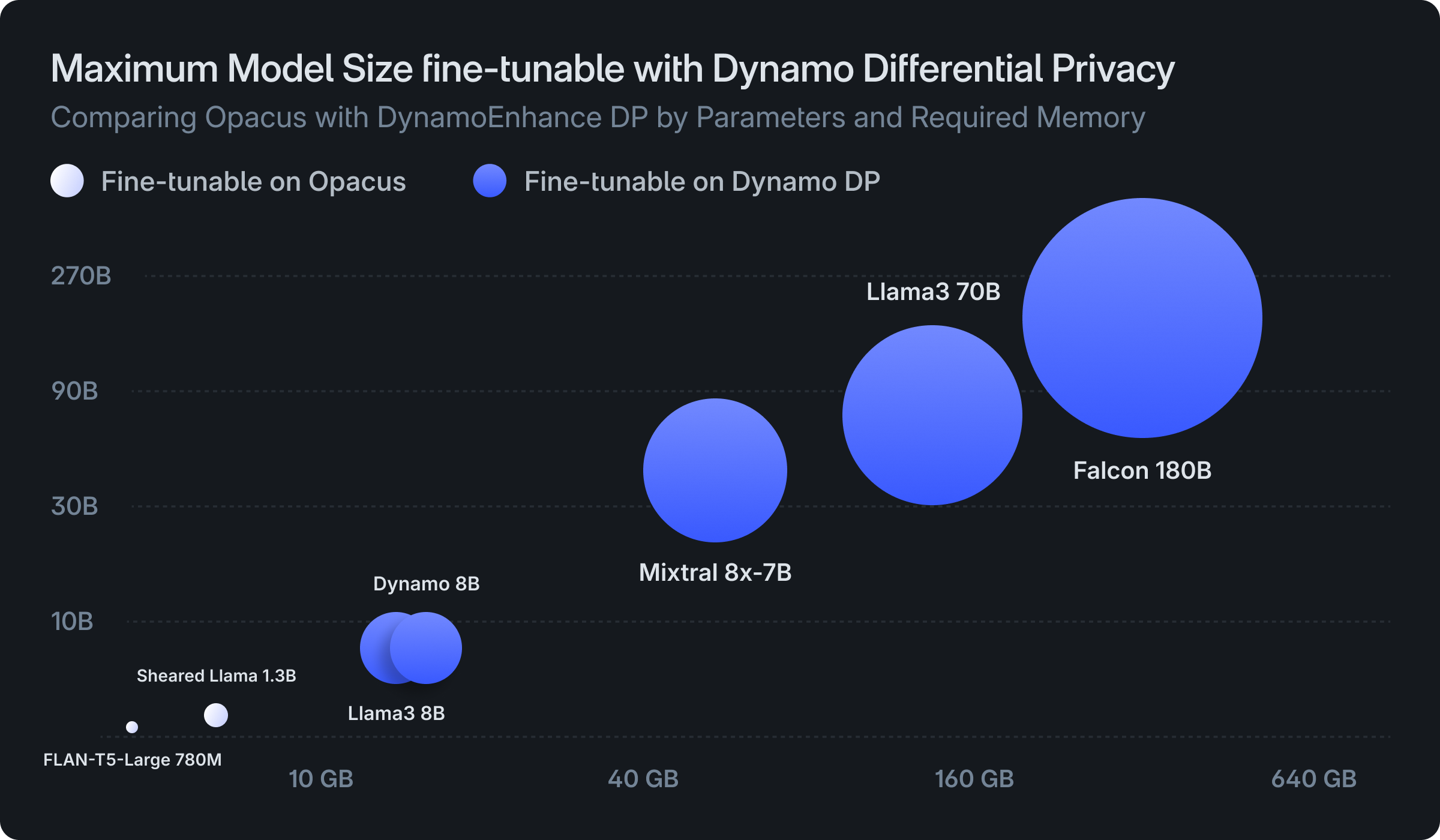

Despite its promise for safeguarding LLMs, differential privacy has been slow to gain adoption. The sheer scale of LLMs, some of which have trillions of parameters, poses serious engineering hurdles.

Traditional Differentially Private Stochastic Gradient Descent (DP-SGD) computes a separate gradient for each training example, clips it to a fixed norm, and adds noise to the aggregated gradient. Computing these per-sample gradients significantly slows training compared to standard, non-private training, because it gives up much of the batched parallelism that makes GPUs efficient. The result is longer training times and higher GPU memory requirements.
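To make the per-sample cost concrete, here is a minimal NumPy sketch of a single step of the standard DP-SGD recipe: compute one gradient per example, clip each to an L2 norm of at most `clip_norm`, sum, add Gaussian noise with standard deviation `noise_multiplier * clip_norm`, and average. It assumes a toy linear-regression loss so the per-example gradients have a closed form; the function names and the toy model are illustrative assumptions, not how DynamoEnhance or any particular library implements DP-SGD.

```python
import numpy as np

def per_example_grads(weights, X, y):
    # Hypothetical toy model: gradient of 0.5 * (x . w - y)^2 for each row of X.
    residuals = X @ weights - y            # shape (batch,)
    return residuals[:, None] * X          # shape (batch, dim), one gradient per example

def clip_per_example(grads, clip_norm):
    # Scale each example's gradient so its L2 norm is at most clip_norm.
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    return grads * factors

def dp_sgd_step(weights, X, y, lr, clip_norm, noise_multiplier, rng):
    clipped = clip_per_example(per_example_grads(weights, X, y), clip_norm)
    # Gaussian noise is calibrated to the clipping norm, then the noisy sum is averaged.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=weights.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / X.shape[0]
    return weights - lr * noisy_mean

# Usage: a few noisy steps on synthetic data.
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 4))
y = X @ np.array([1.0, -2.0, 0.5, 3.0])
w = np.zeros(4)
for _ in range(100):
    w = dp_sgd_step(w, X, y, lr=0.1, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

In a deep network, standard backpropagation returns only the summed gradient for a batch, so obtaining the per-example gradients that clipping needs costs extra computation or memory.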

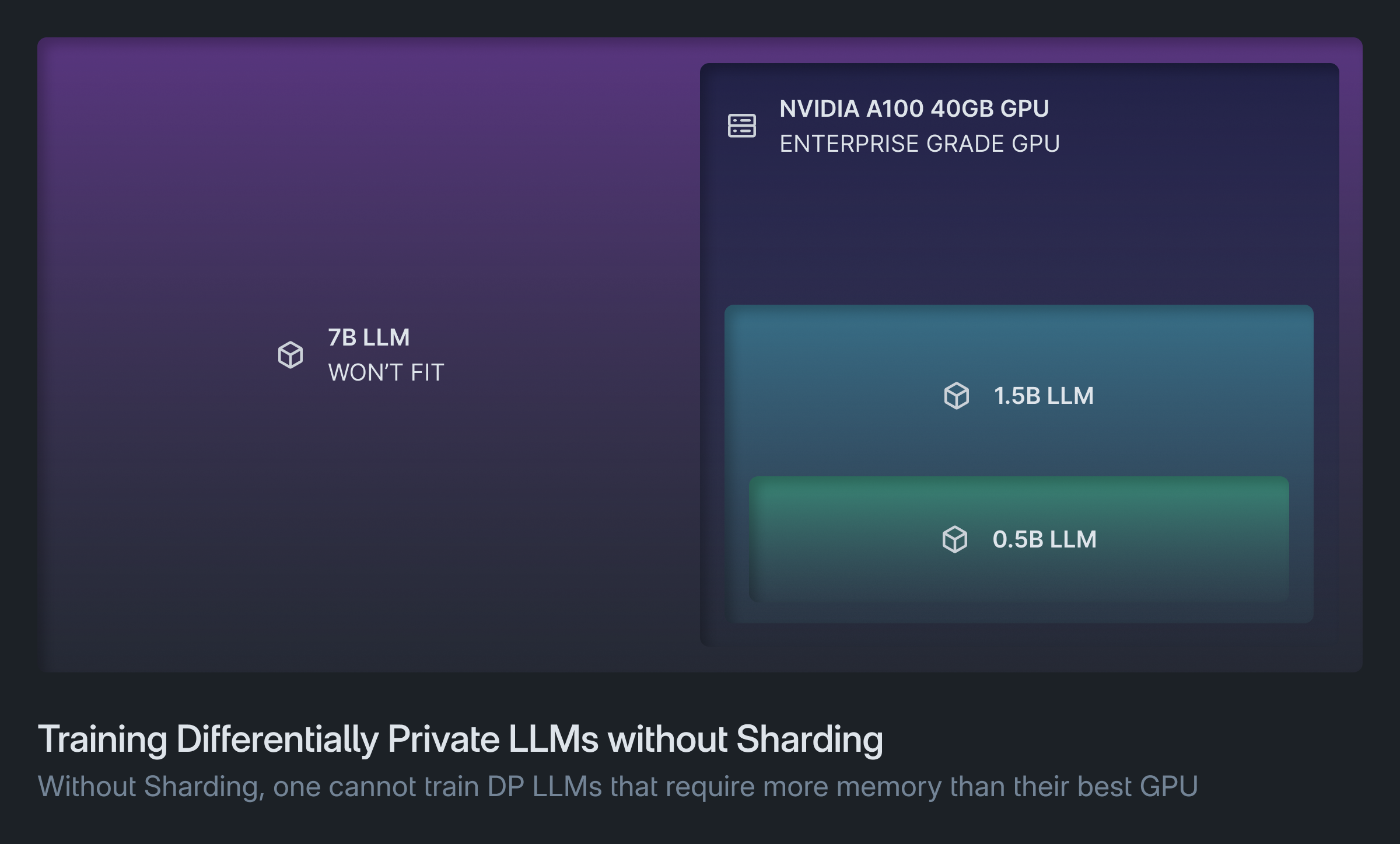

The previous state of the art in differentially private fine-tuning struggled with models larger than approximately 1.5 billion parameters. Practitioners faced limited throughput and extremely long training runs, and the memory demands of these methods made it difficult to train on anything other than high-end GPUs such as the A100 (40 GB or 80 GB), making implementation costly and complex.
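A rough back-of-the-envelope calculation shows why per-sample gradients strain GPU memory. Assuming they are naively materialized in 32-bit floats (4 bytes per value), a batch of B examples needs B full copies of the gradient. The helper below is hypothetical and ignores weights, activations, and optimizer states; memory-saving techniques such as ghost clipping avoid materializing per-sample gradients altogether.

```python
def per_sample_grad_bytes(num_params, batch_size, bytes_per_value=4):
    # Hypothetical helper: memory to hold one full gradient per example
    # (naive materialization, fp32 by default).
    return num_params * batch_size * bytes_per_value

GB = 1e9
# A 1.5B-parameter model with a batch of 8 examples:
print(per_sample_grad_bytes(1_500_000_000, 8) / GB)  # 48.0
```

Under these assumptions, the per-sample gradients alone would exceed the memory of a 40 GB A100.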

Moreover, existing differential privacy frameworks, such as the Opacus library, aren't well suited to large LLM workloads. While Opacus supports Distributed Data Parallel (DDP) training, it lacks model sharding capabilities.

DDP replicates the entire model on each GPU, so every GPU must hold a full copy of the model along with its gradients and optimizer states. For models with billions of parameters, this made efficient multi-GPU training difficult or nearly impossible. As a result, the lack of model sharding in Opacus has limited the scalability and practicality of differentially private training for large-scale deep learning models.
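The ZeRO paper's accounting for mixed-precision Adam training makes this limitation concrete: each parameter costs about 16 bytes of model state (2 for fp16 weights, 2 for fp16 gradients, and 12 for fp32 master weights, momentum, and variance). Plain data parallelism such as DDP keeps all 16 bytes per parameter on every GPU, while ZeRO stages 1 to 3 progressively partition optimizer states, gradients, and parameters across the N GPUs. The helper below is a hypothetical sketch that covers model states only, not activations, temporary buffers, or any extra memory that differentially private training needs.

```python
def model_state_bytes_per_gpu(num_params, num_gpus, zero_stage):
    # Hypothetical helper using the ZeRO paper's 16 bytes/parameter accounting
    # for mixed-precision Adam (2 fp16 weights + 2 fp16 grads + 12 fp32 optimizer state).
    p, n = num_params, num_gpus
    if zero_stage == 0:   # no sharding (e.g. DDP): full replica on every GPU
        return 16 * p
    if zero_stage == 1:   # optimizer states partitioned
        return 4 * p + 12 * p / n
    if zero_stage == 2:   # optimizer states and gradients partitioned
        return 2 * p + 14 * p / n
    if zero_stage == 3:   # optimizer states, gradients, and parameters partitioned
        return 16 * p / n
    raise ValueError("zero_stage must be 0, 1, 2, or 3")

GB = 1e9
# A 70B-parameter model on 8 GPUs:
print(model_state_bytes_per_gpu(70_000_000_000, 8, 0) / GB)  # 1120.0
print(model_state_bytes_per_gpu(70_000_000_000, 8, 3) / GB)  # 140.0
```

Even with full sharding, 140 GB per GPU exceeds a single 80 GB A100, so in practice more GPUs, offloading, or parameter-efficient methods would likely be combined with sharding.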

Apply differential privacy at scale with DynamoEnhance

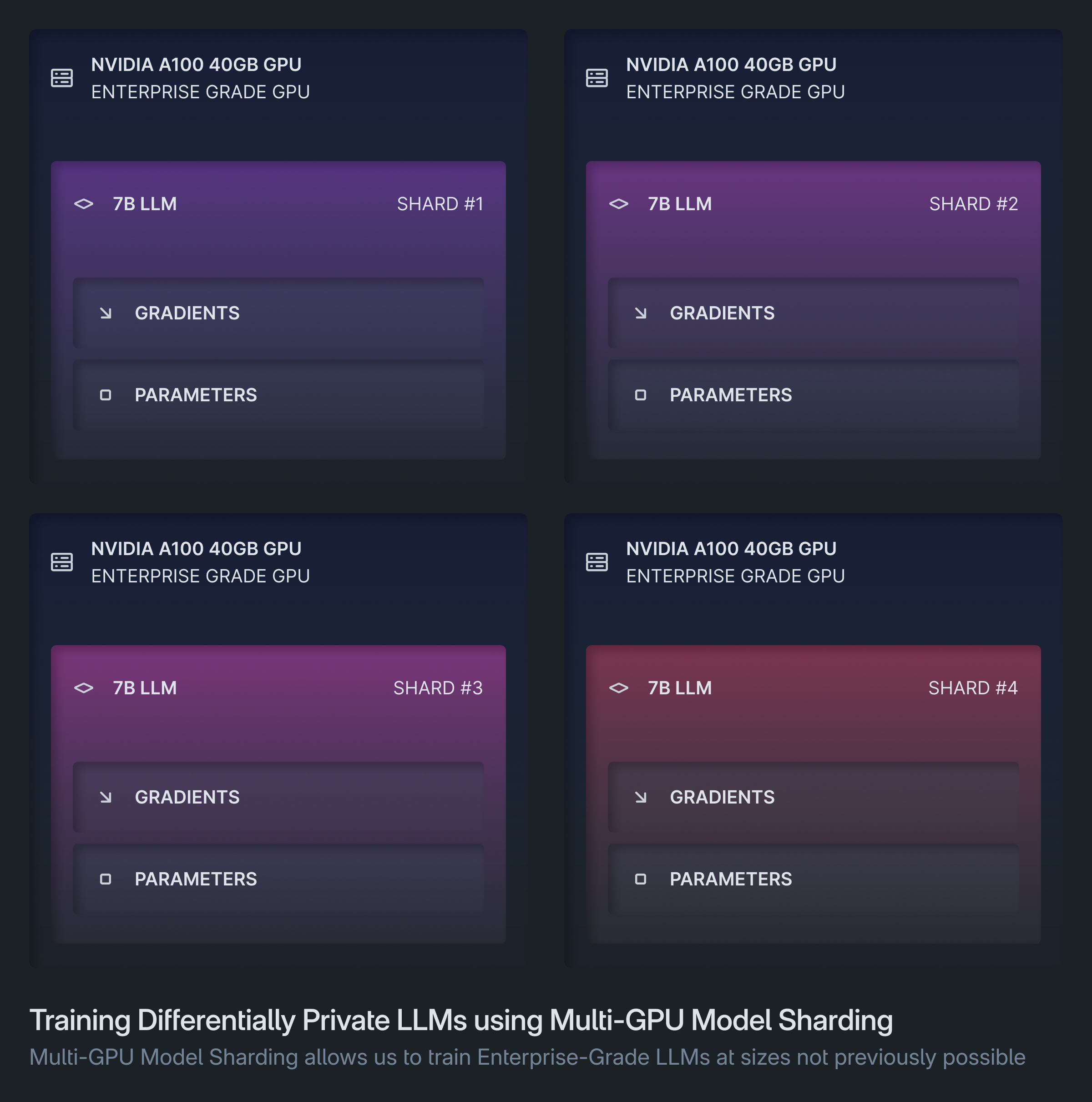

Bu et al. developed a new approach called DP-ZeRO to enable large-scale differentially private deep learning using the DeepSpeed library. DeepSpeed, known for its Zero Redundancy Optimizer (ZeRO), speeds up training and reduces per-GPU memory usage for large models by partitioning optimizer states, gradients, and optionally model parameters across GPUs instead of replicating them. The researchers extended DeepSpeed to support differentially private training, demonstrating that effective privacy injection is achievable at scale with the right techniques.

DP-ZeRO opens up exciting opportunities for Dynamo AI to build upon the work and integrate scalable differential privacy in DynamoEnhance. By leveraging DeepSpeed's multi-GPU model sharding capabilities and incorporating differential privacy into the distributed training process, DynamoEnhance offers enhanced data protection and privacy without sacrificing the power of large-scale models.

This is where we come in. DynamoEnhance’s MultiGPU privacy framework, built on the DeepSpeed library, seamlessly integrates differential privacy. It features user-friendly Trainers inspired by the popular Hugging Face transformers and TRL (Transformer Reinforcement Learning) libraries, making advanced privacy protection accessible while optimizing model performance.

```python
from dynamofl.privacy import DPTrainer, PrivacyArguments

# model, tokenizer = ...
# train_dataset, eval_dataset = ...
# train_args = ...  (training arguments, defined elsewhere)

# Target privacy budget (epsilon) for the training run
privacy_args = PrivacyArguments(target_epsilon=1.0)

trainer = DPTrainer(
    model=model,
    tokenizer=tokenizer,
    args=train_args,
    privacy_args=privacy_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
)

trainer.train()
```

In the above example, we set the target epsilon value in `PrivacyArguments`, where epsilon represents the “privacy budget.” A lower epsilon means a smaller privacy budget, so more noise is added to the gradients and privacy protection is stronger. Conversely, a higher epsilon means a larger privacy budget and less noise added to the gradients, offering weaker privacy protection.
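For intuition about how epsilon maps to noise, the classical Gaussian mechanism for a single release sets the noise standard deviation to σ = Δ·√(2 ln(1.25/δ))/ε, which is valid for 0 < ε < 1, where Δ is the L2 sensitivity and δ is a small failure probability. Halving ε doubles σ. This is only an illustration: DP-SGD training spans many noisy steps, so libraries typically use a privacy accountant (for example, one based on Rényi DP) to choose the noise multiplier for a target epsilon, and we are not assuming anything about how `PrivacyArguments` performs this calibration. The function name `gaussian_sigma` is hypothetical.

```python
import math

def gaussian_sigma(epsilon, delta, sensitivity=1.0):
    # Hypothetical helper: noise std for the classical Gaussian mechanism
    # (single release; the bound is stated for 0 < epsilon < 1).
    if not (0 < epsilon < 1):
        raise ValueError("classical bound assumes 0 < epsilon < 1")
    if not (0 < delta < 1):
        raise ValueError("delta must be in (0, 1)")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon

# Smaller epsilon (tighter privacy budget) -> larger noise.
for eps in (0.25, 0.5, 0.9):
    print(eps, round(gaussian_sigma(eps, delta=1e-5), 2))
```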

By leveraging DeepSpeed and incorporating innovative techniques, DynamoEnhance enables efficient, scalable training of LLMs while maintaining robust privacy guarantees and accommodating larger batch sizes.

This approach differentiates our solution by giving enterprise customers an effective, easy-to-use way to safeguard sensitive data with differential privacy while harnessing the power of LLMs.

Our technology supports MultiGPU model sharding in ways not previously achievable with existing differential privacy libraries. The DynamoEnhance MultiGPU Differential Privacy SDK is compatible with popular training libraries and methods, including Hugging Face, mixed-precision training, quantized training with BitsAndBytes, Mixture of Quantization (MoQ), LoRA fine-tuning, FlashAttention, and Accelerate. We support leading LLMs such as Llama-70B, Mixtral-8x7B, and more.

Empowering enterprise customers with differential privacy

At Dynamo AI, our mission is to empower enterprise customers with the tools and knowledge necessary to unlock the potential of differential privacy. We offer comprehensive documentation and QuickStart guides that enable users to effortlessly experiment with differential privacy fine-tuning of LLMs, regardless of their technical expertise.

By prioritizing accessibility and usability, we aim to make privacy-enhancing technologies available to a broader audience, beyond just those with a formal background in privacy-preserving machine learning.

As LLMs become more powerful and prevalent, the risk of exposing sensitive information from training datasets increases. Dynamo AI provides comprehensive privacy solutions that help teams effectively measure, address, and prevent data leakage, ensuring the responsible deployment and use of LLMs while protecting sensitive information.

We also offer a range of AI privacy and security solutions to help you build trustworthy and responsible AI systems. To learn more about Dynamo AI and to explore our privacy and security offerings, request a demo today.